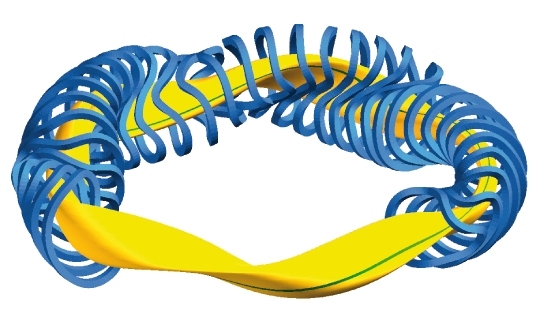

Wendelstein 7-X stellarator fusion reactor.

Nuclear power has always presented the possibility of cheap, nearly unlimited electricity. So far, however, the reality has not lived up to the expectations, because we are using the wrong nuclear process. We have mastered using nuclear fission, which is the process by which large nuclei (like certain isotopes of uranium and plutonium) are split into smaller nuclei. This produces a large amount of energy, but it also produces a large amount of radioactive waste. In addition, the reaction can get out of hand, as we have seen in Chernobyl and Fukushima.

However, some countries have utilized nuclear power well. France, for example, currently gets 75% of its electricity from nuclear power. But even France is transitioning away from it, as it is planning to produce only 50% of its electricity that way by the year 2025. With the high-profile disaster at Fukushima and the problem of radioactive waste, it is understandable why even France is trying to move away from nuclear power.

If we could only master the opposite process, nuclear fusion, we wouldn’t have such problems. In fusion reactions, small nuclei (like certain isotopes of hydrogen) are combined to make a larger nucleus. If the nuclei are small enough, this also produces energy. The nice thing about nuclear fusion is that it does not produce the kind of long-lived radioactive waste that fission does, and the reaction is easy to stop. Indeed, it is hard to keep the reaction going! In addition, hydrogen is more abundant than uranium and plutonium.

Why don’t we use nuclear fusion to produce electricity? Because it’s harder than it sounds. Nuclei are positively charged. When you push two positively charged things together, they repel one another. The closer they get, the more strongly they repel. In order to get two nuclei to combine, they have to get really close together. It takes a lot of energy to make that happen, and so far, the energy we spend trying to force it to happen is more than the energy we get from the reaction.
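To get a feel for how quickly that repulsion grows, here is a rough sketch using Coulomb's law for two hydrogen nuclei (protons). It treats the nuclei as simple point charges and ignores quantum effects and the nuclear force, so take it as an illustration rather than a real fusion calculation. The function names and the three distances are my own choices for this example.

```python
# Coulomb's law for two point charges, in SI units.
K = 8.9875517923e9          # Coulomb constant, N*m^2/C^2
E_CHARGE = 1.602176634e-19  # elementary charge, C


def coulomb_force(r_m, z1=1, z2=1):
    """Repulsive force (newtons) between nuclei with z1 and z2 protons, r_m meters apart."""
    return K * z1 * z2 * E_CHARGE**2 / r_m**2


def coulomb_energy_mev(r_m, z1=1, z2=1):
    """Electrostatic potential energy (MeV) of the two nuclei at separation r_m."""
    joules = K * z1 * z2 * E_CHARGE**2 / r_m
    return joules / E_CHARGE / 1e6  # joules -> eV -> MeV


# Illustrative separations: roughly atomic scale down to roughly nuclear scale.
for r in (1e-10, 1e-12, 1e-15):
    print(f"r = {r:.0e} m: force = {coulomb_force(r):.3e} N, "
          f"energy = {coulomb_energy_mev(r):.3e} MeV")
```

Every time the distance is cut in half, the force quadruples. By the time two protons are about a millionth of a billionth of a meter apart, this simple model says you have had to supply roughly 1.4 MeV of energy per pair just to hold them there.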

We know it’s possible to get more energy from the reaction than it takes to get the reaction started, because the sun produces its energy via nuclear fusion. However, the sun has a massive gravitational field that can get the reaction going. We can’t use gravity to start the reaction, so we have to use some other form of energy. One possibility is through the use of lasers. However, that technique isn’t working nearly as well as had been hoped. The other possibility is through the use of magnetic fields.

In this process, called magnetic confinement, a hot sample of hydrogen plasma is injected into a reactor that uses magnetic fields to squeeze the hydrogen nuclei together. Billions of dollars have been spent trying to get this process to work. While progress has been made, no such reactor has yet been able to get as much energy out of the reaction as it has put into the reaction. In other words, no magnetic confinement reactor has yet “broken even” in terms of energy.
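The “break even” idea is just a ratio, usually called the fusion energy gain factor and written Q: the energy the reaction releases divided by the energy put in. Here is a minimal sketch of that bookkeeping. The function names and the numbers are made up for illustration and are not measurements from any real reactor.

```python
def gain_factor(energy_out, energy_in):
    """Ratio of fusion energy released to energy supplied (both in the same units)."""
    if energy_in <= 0:
        raise ValueError("energy_in must be positive")
    return energy_out / energy_in


def has_broken_even(energy_out, energy_in):
    """True when the reaction gives back at least as much energy as was put in."""
    return gain_factor(energy_out, energy_in) >= 1.0


# Made-up numbers: 10 units of energy in, 6 units out.
print(gain_factor(6.0, 10.0))      # 0.6
print(has_broken_even(6.0, 10.0))  # False
```

A ratio below 1, as in this made-up case, means the reactor is still consuming more energy than it produces.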

One difficulty that magnetic confinement faces is the tendency for plasma to “leak out” of the magnetic field, due to physical issues associated with the shape of the nuclear reactor. This has been known for quite some time, so in the 1950s, Dr. Lyman Spitzer designed an oddly shaped magnetic confinement reactor that reduced this tendency. This kind of reactor is called a stellarator, and its odd design is illustrated above. The stellarator built by Spitzer in 1951 at Princeton worked well. It didn’t break even by any means, but it was able to get nuclear fusion to occur.

The stellarator design was abandoned, however, because a simpler design (called a tokamak) showed some very promising initial results, and the simplicity of the design (at least compared to a stellarator) was quite attractive. As a result, tokamaks have been the focus of magnetic confinement fusion research for the past 40+ years. I remember sitting in the national American Chemical Society meeting back in 1990 and listening to a presentation that assured us the tokamak design would reach break even by 2010 at the latest. That never happened.

Because the tokamak design has not lived up to its initial expectations, some researchers have gone back to the stellarator design. While it is a lot more complex, it does have the potential to produce fusion that lasts for a longer time, because the magnetic fields contain the plasma better. This should produce more energy for roughly the same initial amount of energy required to get the reaction going, so a stellarator might be able to do what a tokamak has so far not been able to do.

Well, the first test of a modern, large-scale stellarator reactor recently happened, and it was a success! Now don’t get too excited. It was a first test. It didn’t even use hydrogen as the fuel. Instead, it used helium. Why? Because it’s easier to make a plasma from helium than it is from hydrogen. In addition, it didn’t try to make energy. The researchers just wanted to see if the design could hold a plasma as expected, and it did!

The researchers plan on working out how to make the best plasma they can for the reactor, and then they will switch to using the proper fuel, hydrogen. At that point, they will start detailed measurements of how much energy is put into the reaction and how much is produced by the reaction. They expect such experiments to start within a year or so. It will be interesting to see how they go.

The possibility of cheap, clean, nearly unlimited power is exciting, but I also find this turn of events rather interesting. After I listened to that American Chemical Society presentation in 1990, a group of us went out to dinner. I asked my colleagues (most of whom were much more experienced in the field) why the stellarator design was not being pursued, as it seemed superior to me. I was told that the stellarator was “old technology” and that the tokamak design was the future. After investing 40+ years and billions of dollars into “new technology,” some nuclear scientists are seeing the advantages of the “old technology.”

Newer isn’t always better!