Unfortunately, science often does not follow common sense. I use quantum mechanics in my field of research all the time, and it violates common sense at every turn. Nevertheless, I am forced to use the theory because the data strongly support it. Thus, even though “it makes sense” that rising carbon dioxide levels will increase the earth’s temperature, we don’t know that for a fact. Indeed, the majority of the data have consistently shown that this is not the case. Several analyses of ice-core data show that on long time scales, the average temperature of the earth rises, and then carbon dioxide levels rise (see here, here, here, and here).

Now, of course, all these studies use proxies to estimate global temperature, and that can be tricky. In addition, producing the time scale involves making several unverifiable assumptions. Thus, I have never put much stock in such studies. However, others who are interested in climate change (aka Global Warming) take these data seriously. They generally say that these long-term trends show the effects of changes in earth’s orbital cycle, which change the energy it gets from the sun. Thus, the trends aren’t relevant to what is happening right now. Also, there are at least some ice-core analyses that show carbon dioxide rising before temperature does. In other words, it’s complicated.

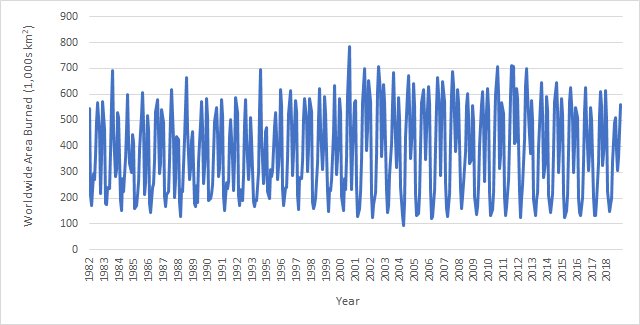

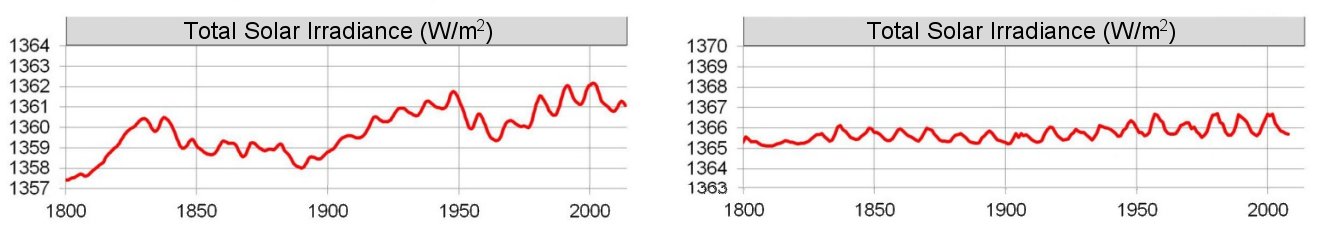

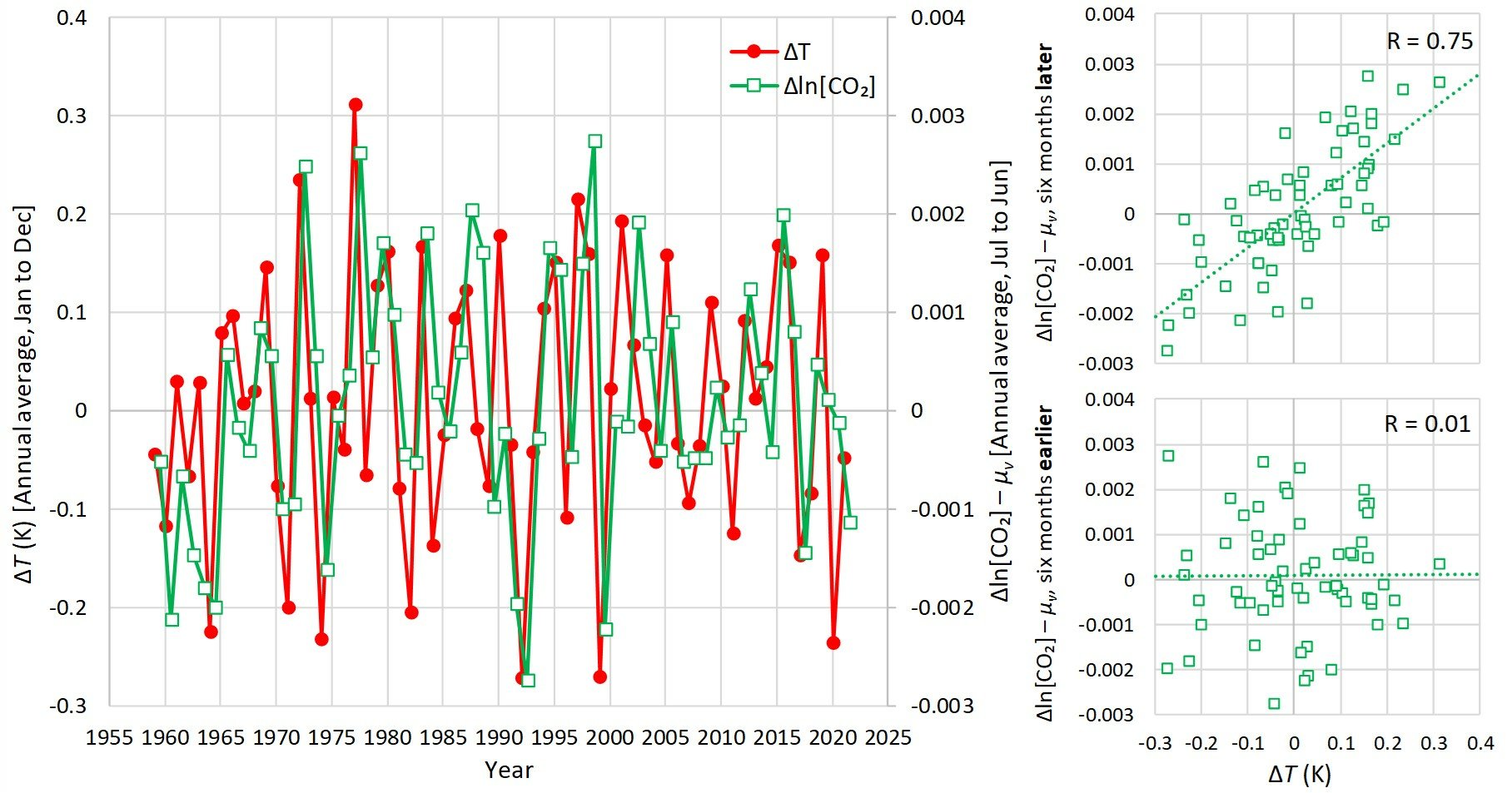

However, a recent study (which actually builds on two previous studies) looked at the modern data that has been collected for carbon dioxide levels in the atmosphere and global temperature. In other words, it analyzed what is happening right now. Of course, there are several sets of data for global temperatures, and they don’t really agree with one another, but the authors used a well-accepted one. What they found is that even on this relatively short time scale, carbon dioxide levels rise after temperatures rise. In fact, here are three graphs from the abstract:

From the graph on the left, it is clear that temperature (red line) rises first, then carbon dioxide level (green line) rises. The other two graphs show this even more convincingly. On those two graphs, changes in carbon dioxide and temperature are only correlated with one another if you consider the change in carbon dioxide level after the change in temperature, not before.
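To make the idea of "correlated only at a lag" concrete, here is a minimal Python sketch of a lagged correlation. It is not the study's method or data. The series are synthetic, the 10-step delay is an arbitrary assumption, and the names `lagged_correlation`, `best_lag`, `temp_change`, and `co2_change` are made up for illustration.

```python
import math
import random


def pearson(x, y):
    """Plain Pearson correlation coefficient of two equal-length lists."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def lagged_correlation(x, y, lag):
    """Correlation between x[t] and y[t + lag].

    A positive lag means y is compared at a later time than x
    (y follows x); a negative lag means y is compared earlier.
    """
    if lag > 0:
        x, y = x[:-lag], y[lag:]
    elif lag < 0:
        x, y = x[-lag:], y[:lag]
    return pearson(x, y)


def best_lag(x, y, max_lag):
    """Lag in [-max_lag, max_lag] with the highest correlation."""
    return max(range(-max_lag, max_lag + 1),
               key=lambda k: lagged_correlation(x, y, k))


# Synthetic data (an assumption, NOT real measurements): the "CO2 change"
# series is a noisy copy of the "temperature change" series, delayed by
# TRUE_LAG time steps.
rng = random.Random(0)
TRUE_LAG = 10
N = 600
driver = [rng.gauss(0, 1) for _ in range(N + TRUE_LAG)]
temp_change = driver[TRUE_LAG:]
co2_change = [d + 0.3 * rng.gauss(0, 1) for d in driver[:N]]

print("best lag:", best_lag(temp_change, co2_change, 24))
print("corr, CO2 after temperature:",
      lagged_correlation(temp_change, co2_change, TRUE_LAG))
print("corr, CO2 before temperature:",
      lagged_correlation(temp_change, co2_change, -TRUE_LAG))
```

In this toy setup the correlation is strong only when the carbon dioxide series is shifted later than the temperature series, which is the kind of pattern the second and third graphs are described as showing. Keep in mind that a lag in a correlation shows ordering, not mechanism.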

But wait a minute. How can temperature affect carbon dioxide level? Well, one of the major places the earth stores carbon dioxide is in the ocean (and, to a lesser degree, in fresh water). When temperatures go up, carbon dioxide becomes less soluble, so the oceans release carbon dioxide. From that point of view, it “makes sense” that rising temperatures will cause rising carbon dioxide levels. But once again, science doesn’t always make sense. Thus, it’s probably very complicated. Most likely, rising temperatures cause rising carbon dioxide levels, and those rising carbon dioxide levels cause more rising temperatures.
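The solubility part of that argument can be sketched with Henry's law and a simple van 't Hoff temperature correction. This is only a rough illustration. The constants below are approximate textbook values for carbon dioxide in pure water (an assumption; seawater chemistry is more involved), and the function names and the partial pressure are hypothetical.

```python
import math

# Approximate textbook values for CO2 in pure water (assumed, not from the study).
KH_REF = 0.034        # Henry's law constant at T_REF, in mol/(L*atm)
T_REF = 298.15        # reference temperature in kelvin (25 degrees C)
VANT_HOFF_C = 2400.0  # temperature-dependence constant, in kelvin


def henry_constant(temp_k):
    """Henry's law constant at temp_k, using a van 't Hoff correction."""
    return KH_REF * math.exp(VANT_HOFF_C * (1.0 / temp_k - 1.0 / T_REF))


def dissolved_co2(temp_k, partial_pressure_atm):
    """Equilibrium dissolved CO2 concentration in mol/L."""
    return henry_constant(temp_k) * partial_pressure_atm


P_CO2 = 400e-6  # hypothetical CO2 partial pressure in atm
for temp_c in (5, 15, 25):
    conc = dissolved_co2(temp_c + 273.15, P_CO2)
    print(f"{temp_c:>2} C: {conc:.2e} mol/L")
```

At a fixed partial pressure, the computed equilibrium concentration falls as the temperature rises, which is why warmer water holds less dissolved carbon dioxide.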

Which is more important? If you trust this study, it’s the former. In their appendix, the authors estimate that the increase in atmospheric carbon dioxide levels caused by increasing temperature is three times greater than the increase in carbon dioxide levels caused by human emissions. Now, of course, that still means human emissions increase temperature. However, it also means that (not surprisingly) the global climate models aren’t properly taking this into account. As a result, global climate models are exaggerating humanity’s contribution to global warming. While I think that has already been well established, this study gives at least one of the explanations for it.