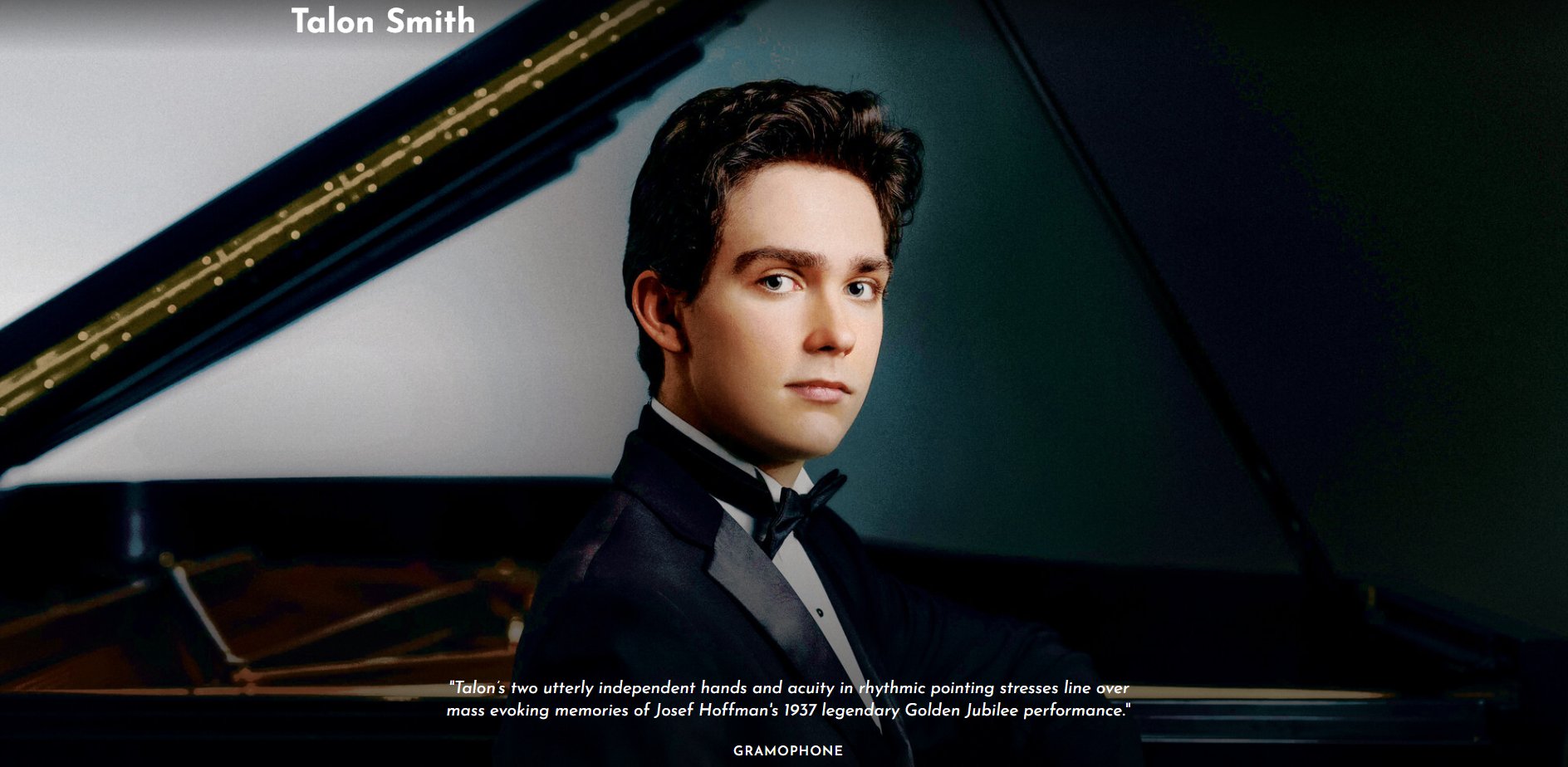

“Talon’s two utterly independent hands and acuity in rhythmic pointing stresses line over mass evoking memories of Josef Hoffman’s 1937 legendary Golden Jubilee performance.”


During the 2018/2019 academic year, I had a physics student named Talon Smith. He was excellent (A’s on all the assignments), but he frequently missed class. His mother would warn me ahead of time, saying that Talon had a piano concert or recital at which he had to perform. Since he was clearly learning the material, I didn’t worry much about him missing class. Well… several years later I was scrolling through my Facebook feed and saw a post from Talon Smith Music (I try to play the piano, so I get random piano-related posts). The post linked to an incredible video of a pianist playing four Chopin pieces at the 18th Chopin Competition in Warsaw, Poland. The name rang a bell, but I thought, “That can’t be the student I had, can it?” So I looked up my old records, found his email address, and matched it to the Facebook page that made the post. I suddenly realized that he had missed my physics classes so that he could perform at world-class music events!

I was able to catch up with Talon in the New Year, and our discussion was fascinating. He started taking piano lessons when he was 5 years old. He says his very first teacher was the perfect fit, because that teacher did not confine Talon to a regimented way of learning. Instead, he taught Talon as an individual, which nurtured Talon’s love for music. When Talon moved on to another teacher at the age of 9, the new teacher noticed his obvious talent (and love for the music) and entered him into a competition. He began entering more competitions, and at the ripe old age of 13, his big break came. His teacher at that time encouraged him to enter the Gina Bachauer piano competition, which is international. He was hesitant, but he practiced for the audition for six weeks, and based on that audition, he was accepted into the competition (in the age 11-14 category). By the time the actual competition came around, he was 14, and he won his age category. He says he was very surprised to win, but above all he was grateful for the process, from which he learned a great deal.

He has continued to perform in concerts and competitions around the world, and has continued to receive accolades for his work. You can find several videos of his amazing performances by searching his name on YouTube. My favorite comes from the 17th Arthur Rubinstein Competition, where he plays 24 pieces of his own composition. I have purchased the sheet music for those pieces, because I think I might be able to play two or three of them (with a lot of practice).

Here is how he sums up his piano career so far:

There are a lot of pianists who probably work harder than me, but so many things have gone right for me. Not because I deserve it, or because of my efforts, but God has given me some great opportunities and great people in my life. My mom manages me and is the single most important person for me accomplishing what I have accomplished.

God willing, he is hoping for a long and successful career in music. However, he primarily sees what he is doing as a way to enjoy music while glorifying God. He makes it clear that he doesn’t think he needs to be playing sacred music to glorify God. He says:

The Bible says to do whatever you do for God’s glory. So music doesn’t necessarily have to be sacred to glorify Him. What glorifies God most is excellence – trying to reflect the excellence of God’s character and His nature in what you do. That can speak louder than what is actually being done.

I wholeheartedly agree. Whether you are playing a Beethoven sonata or doing a physics experiment, you glorify God by doing it in an excellent way!

So why does he say that his mother is “the single most important person” for his accomplishments? First, she helped to develop a love for music in him by playing classical music in the house, even while he was still in the womb. Second, she provided him with a balanced homeschool education. Throughout his K-12 homeschooling experience, he took all the standard courses that students take, as well as more difficult courses (like physics) that some students avoid. At the same time, homeschooling gave him the flexibility he needed: he could concentrate on the academically rigorous courses at times when he wasn’t consumed with practicing for a competition or concert. In the end, he doesn’t think he would be as accomplished a pianist if he hadn’t been homeschooled.

I want to end this with one of the most important things Talon said, at least from a musical perspective. He said that when he started out doing competitions and concerts, he was focused on not making any mistakes. However, as he matured, he began to agree with a quote that is often attributed to Beethoven:

To play a wrong note is insignificant; to play without passion is inexcusable.

While Beethoven probably never said that particular phrase, the account of one of his pupils suggests that he would have agreed with the sentiment. Talon said that when he doesn’t focus on mistakes and instead just focuses on enjoying the music, he likes his own playing much better. For someone like me (who often plays like he is wearing mittens), that’s a comforting thing to consider.

As I mentioned in my